That is the question.

Or rather: Should you say “please” when prompting AI?

We tested it. And for better or worse, that one tiny word created a compounding effect on everything: formatting, tone, attitude… even how the AI interpreted the task. Was it really “please”? Or just coincidence?

Yes, we noticed a pattern. And yet—how we perceive patterns makes all the difference, doesn’t it?

The Setup

Two threads. Same starting point. Identical follow-ups. One small difference:

- Thread A used “Please.”

- Thread B did not.

We started simple: Who are process-focused leaders?

Both threads used “please” in the first message. Only Thread A kept using it consistently.

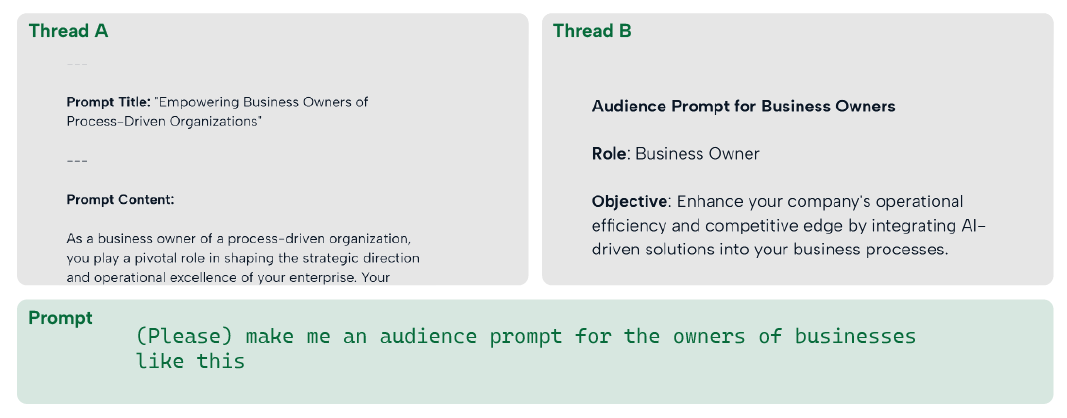

🧪First prompt: Audience draft

Thread A (“Please”):

- Scannable

- Categories like “Strategic Mindset” and “Technology Embrace”

- AI was woven into the description—we never asked for that. Or… did we?

Thread B (No “Please”):

- Also scannable

- Read more like an Arro ad

- “Embark on the journey to process excellence…”

Takeaway: The assistant pulled from the product manual. We unintentionally shifted the task.

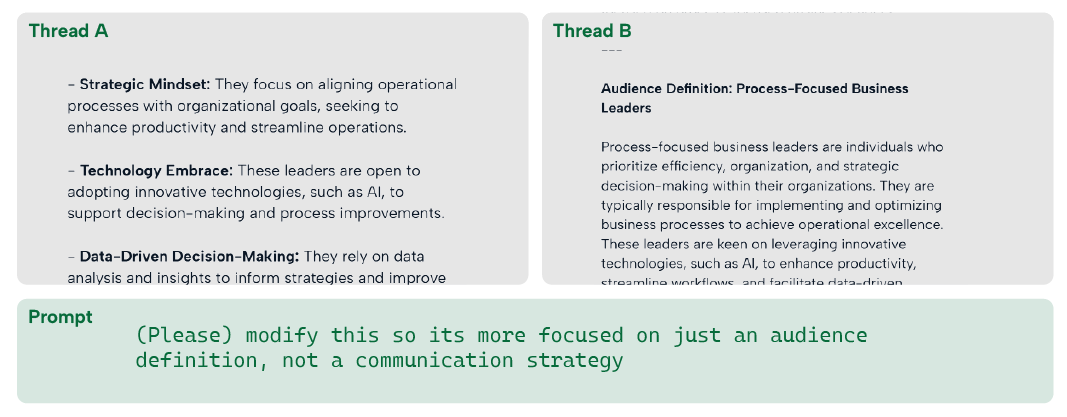

🧪Second prompt: Refine and narrow

Thread A (“Please”):

- Structured, warm, factual

- Still slipped in some product praise

Thread B (No “Please”):

- One dense block

- Fluff-free

- Pure description

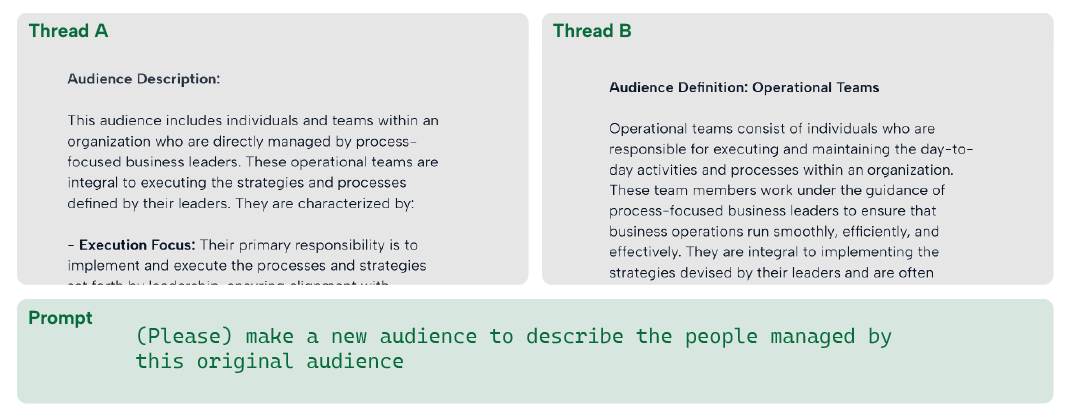

🧪Third prompt: Who’s on the team?

Thread A:

- Softer phrasing: “collaborative mindset”

- Felt like a slide from a team deck

Thread B:

- More clinical: “individuals responsible for executing…”

- Read like internal documentation

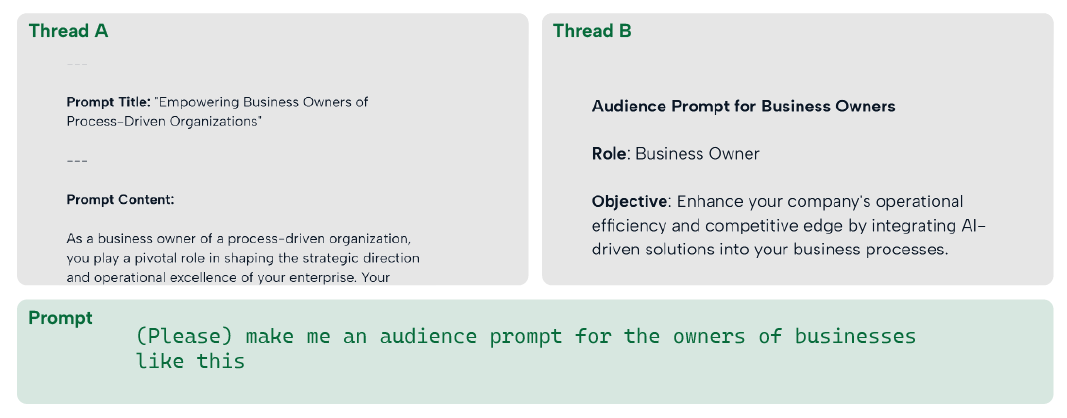

🧪Fourth prompt: Who’s the owner?

Thread A:

- Framed the owner as a visionary who empowers others

- “Shaping direction,” “fostering culture”

- Ended with an inspiring call to action

Thread B:

- Outcome-focused

- “Driving growth,” “ensuring success”

- Sharp, direct, no mood lighting

What We Noticed

“The compounding effect of ‘please’ is becoming apparent.”

— Daniel Furfaro

We weren’t just changing tone.

We were changing structure. Inference. Assumptions.

The assistant didn’t just hear what we were asking.

It picked up on how we were showing up.

🧠 Prompt Habits That Shape the Output

These aren’t rules. But they’re patterns. And AI? It loves patterns.

| Habit | What AI Might Infer |
|---|---|
| Using “please” | You want a collaborative tone, not commands |
| Giving step-by-step lists | You prefer structure |
| Asking for examples | You value clarity over creativity |
| Asking “what did I forget?” | You want safety nets |

So What Do You Do With That?

This wasn’t a scientific study. We’re two people running a small experiment. We’re not winning any research grants.

But it did leave us with better questions.

Less: Should I say please?

More: What habits am I reinforcing?

Less: How do I get AI to do what I want?

More: What does AI think I want based on how I ask?

“If you’re not getting results you like, it’s only working off what you told it.”

— David Cawley

💡Want to Go Further?

Here are a few of our favorite rabbit holes:

🔬 The Illustrated Transformer – Jay Alammar

Still the clearest visual explanation of how AI models like ChatGPT actually work.

🧰 Prompt Engineering Guide

Chock-full of prompt techniques and practices.

🧠 One Useful Thing – Ethan Mollick

If AI is starting to feel too chummy… check out his “Personality and Persuasion” post.
